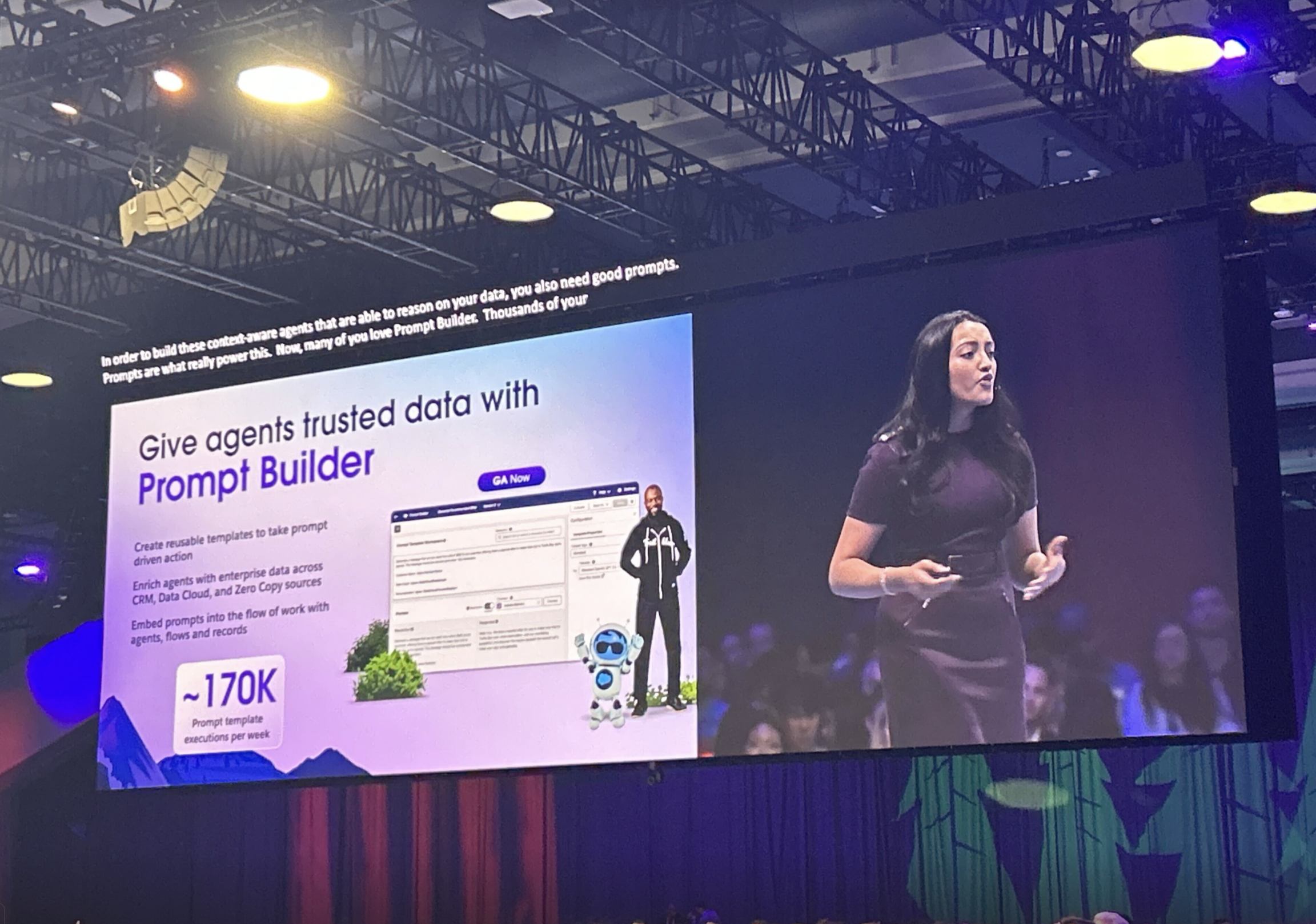

Expanding Prompt Authoring from a Standalone Tool to a Platform Capability

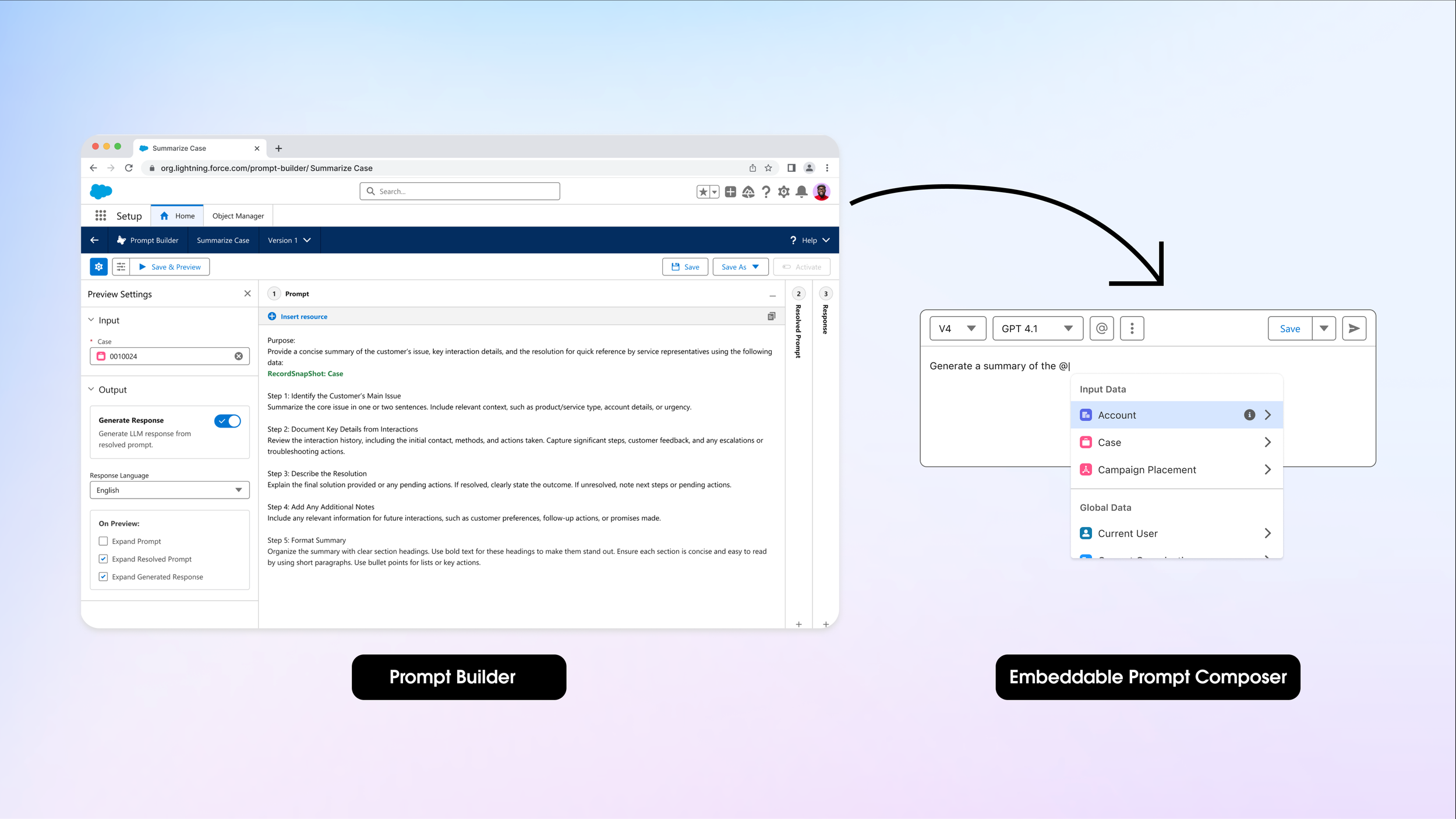

As a UX leader, I helped guide the evolution of Salesforce Prompt Builder from a standalone tool to a platform capability. By applying systems thinking, I reframed Prompt Authoring as a reusable experience, introducing modular components like the Embeddable Prompt Composer that could be leveraged directly in users’ flow of work.

Why the shift was needed

Because prompt authoring in Salesforce was centralized in a single monolithic tool, Prompt Builder, teams and end users couldn’t create, refine, or reuse prompts in their flow of work.

This bottleneck forced teams to duplicate authoring capabilities, which led to inconsistent UIs and fragmented user experiences. As a result, Prompt Builder adoption slowed, AI-powered features and agents became less efficient, and latency increased.

Co-led and co-facilitated the Go-To-Market and Innovation Workshop, a structured space for reflection outside our daily execution. The session aligned the team on the long-term vision and near-term adoption challenges. It also surfaced risks early and clarified opportunities to increase adoption.

Fragmentation revealed that prompt authoring couldn’t remain just a feature inside one tool. It needed to be a foundational platform capability.

The opportunity was to establish patterns and capabilities for prompting LLMs that could be embedded as reusable components across Salesforce experiences.

Our Goals

Increase template creation: Encourage teams and users to save prompts as reusable templates when using or building LLM-powered experiences.

Expand access to prompting: Enable non-admins, who are often the most familiar with the business use case, to take advantage of LLM prompting.

Increase template usage: Make templates readily available within the flow of work, reducing reliance on custom integrations to give users access to them.

Accelerate AI delivery: Equip internal teams with plug-and-play components, saving the sprint cycles they would otherwise spend rebuilding functionality we already support.

Ensure consistency: Provide users with familiar UI and interaction patterns for prompting LLMs, making it easier to learn and use prompting experiences in Salesforce.

This led to the creation of the Embeddable Prompt Composer.

First, leading through ambiguity

…but what would an Embeddable Prompt Composer entail, and what impact would it have on the current product?

There was broad agreement on the need, but the product, engineering, and UX teams lacked alignment on the composer's definition, target personas, and use cases. This misalignment raised an existential concern: if the new composer simply duplicated the standalone product, why would users adopt it?

To provide clarity and alignment, I partnered with the Prompt Builder product owner to gather cross-team use cases. This work uncovered where, when, and why embedded prompt authoring would be valuable, and how it would fit within the existing authoring lifecycle.

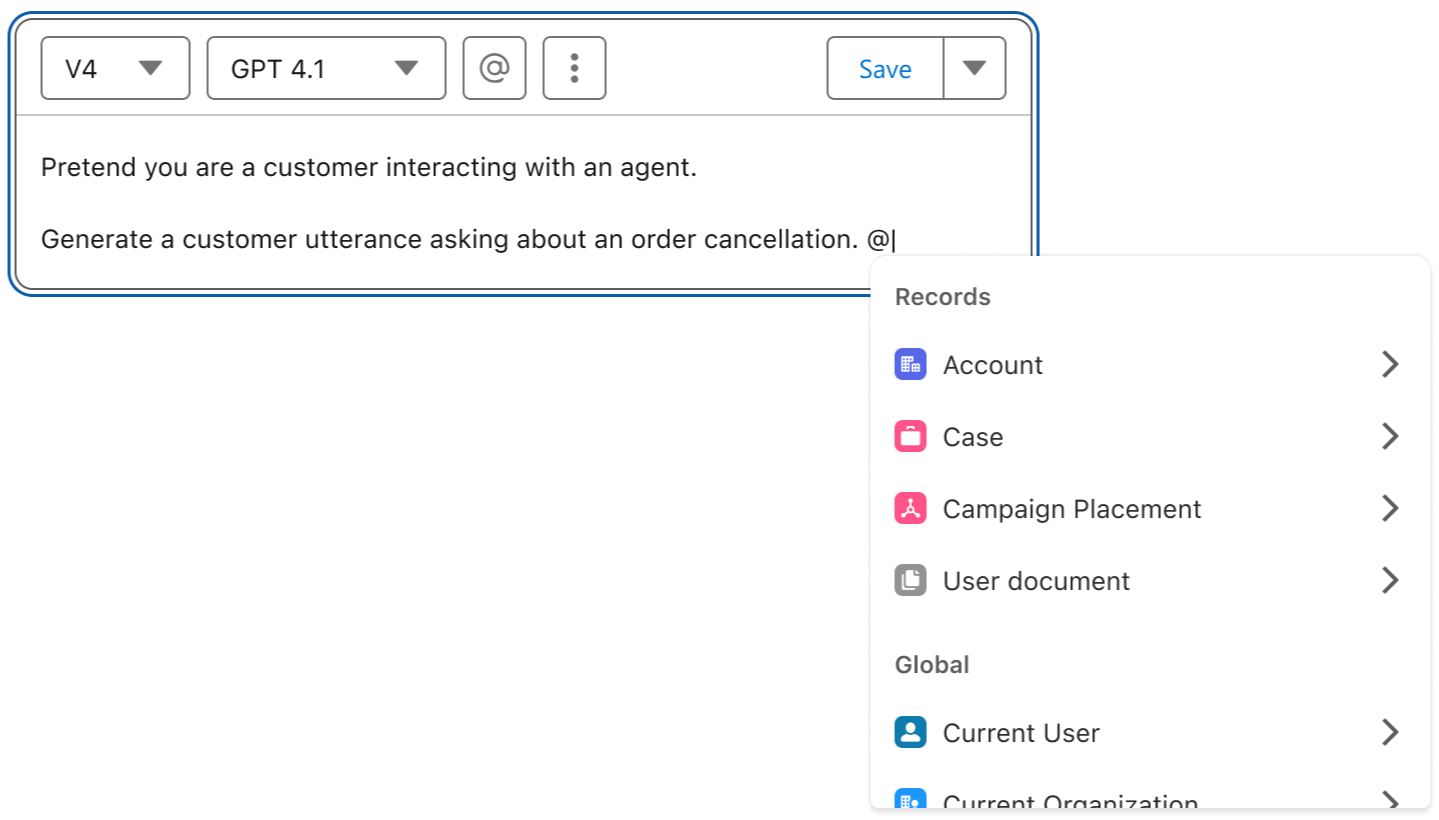

This component will provide a consistent UI for prompt authoring that can be used anywhere from the Lightning App Builder to deep within custom applications. This will accelerate AI delivery for internal teams and expand access to prompt engineering for non-admin users.

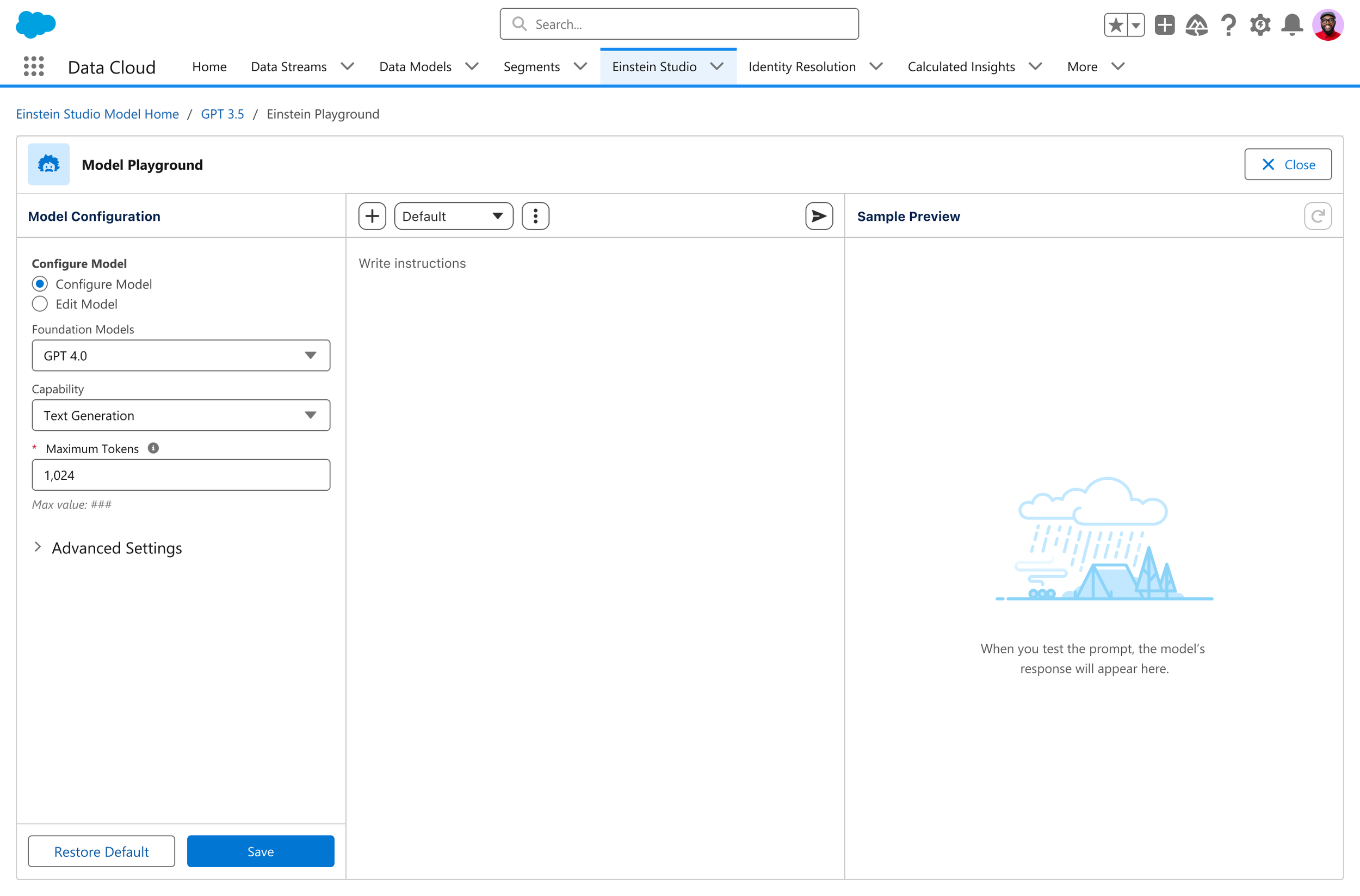

Gathered existing prompt composer explorations and analyzed the competitive landscape. This identified what we could leverage to reduce net-new components and ensure consistent experiences.

Created user flows to guide team conversations and align product and engineering on the Embeddable Prompt Composer definition.

Scaling to a platform capability began to take shape as we aligned on shared components and a unified vision.

We recognized that composing a prompt is only one of many tasks users might carry out when interacting with an LLM.

So we set out to provide a set of additional components that could be paired with composing:

Prompt Library: will allow users to select an existing template to use as is or modify. Users will have something to build off of when prompting, rather than always starting from scratch.

Prompt Execution: will provide a mechanism for users to run prompt templates. It takes in a template ID and any inputs the template requires to generate a response.

Prompt Response: will allow users to preview the response that’s been generated.

Save Prompt for Reuse: will allow users to save the prompt as a prompt template for future use in similar tasks.
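The sketch below shows how these building blocks might compose. It is a minimal TypeScript illustration, not the Salesforce API. Every name in it is hypothetical: `PromptLibrary`, `executePrompt`, the `{{placeholder}}` syntax, and the stubbed `callModel` function that stands in for an LLM. It only mirrors the contract described above: execution takes a template ID plus the template's inputs and returns a response the user can preview.

```typescript
// Hypothetical shape of a saved prompt template (not a Salesforce type).
interface PromptTemplate {
  id: string;
  name: string;
  body: string; // assumed {{placeholder}} syntax for template inputs
}

type Inputs = Record<string, string>;

// Hypothetical Prompt Library: stores templates so users can start from one
// instead of from scratch; save() doubles as "save prompt for reuse".
class PromptLibrary {
  private templates = new Map<string, PromptTemplate>();

  save(template: PromptTemplate): void {
    this.templates.set(template.id, template);
  }

  get(id: string): PromptTemplate | undefined {
    return this.templates.get(id);
  }
}

// Hypothetical Prompt Execution: resolves a template by ID, fills in its
// inputs, and hands the final prompt to a model function. The returned
// string is what a Prompt Response component would preview.
function executePrompt(
  library: PromptLibrary,
  templateId: string,
  inputs: Inputs,
  callModel: (prompt: string) => string,
): string {
  const template = library.get(templateId);
  if (!template) {
    throw new Error(`Unknown template: ${templateId}`);
  }
  const resolved = template.body.replace(/\{\{(\w+)\}\}/g, (_match: string, key: string) => {
    if (!(key in inputs)) {
      throw new Error(`Missing input: ${key}`);
    }
    return inputs[key];
  });
  return callModel(resolved);
}

// Usage: a stub model that echoes the prompt, standing in for a real LLM call.
const library = new PromptLibrary();
library.save({ id: "summarize", name: "Summarize account", body: "Summarize {{account}} in one sentence." });
const preview = executePrompt(library, "summarize", { account: "Acme" }, (p) => `RESPONSE: ${p}`);
console.log(preview); // RESPONSE: Summarize Acme in one sentence.
```

Each piece is kept separate: selecting, executing, previewing, and saving. That separation lets a host application embed only the parts it needs, which is the kind of modularity the one-size-fits-all composer could not offer.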

Because different applications had varying product requirements, a single, one-size-fits-all Prompt Composer would not scale effectively.

Workbook only supports one-time prompts that use data from the current columns and display the response within the sheet.

Interaction Explorer wanted its users to write instructions and preview responses as they create custom tags to classify moments in agent conversations.

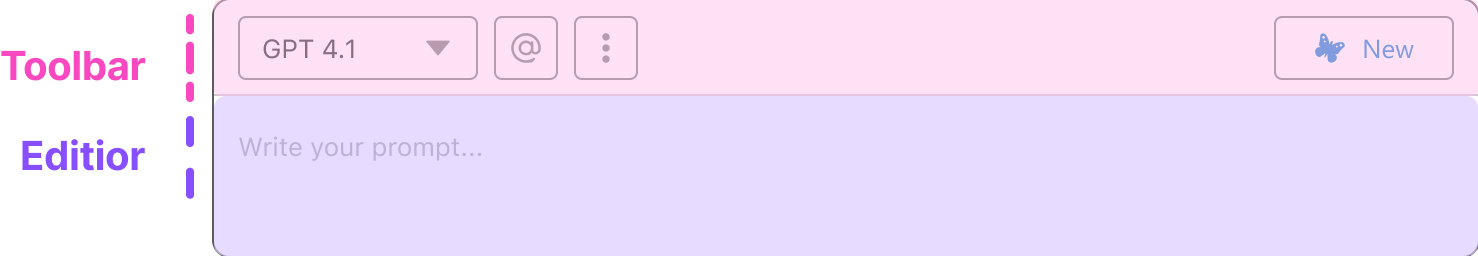

We defined a set of guiding principles that became our north star across UX, product, and engineering:

Modularity: Break the Prompt Composer into smaller building blocks, so it’s easier to build, debug, and maintain over time.

Reusability: Design it so the component works anywhere in Salesforce, not just in one place or context.

Decoupled Data Layer: Use Salesforce’s modern data service, Lightning Data Service (LDS), to handle data instead of older, more complex approaches. This makes the component faster, lighter, and future-proof.

Through continuous design iterations and usability testing, we defined the foundational building blocks of Prompt Composer.

Embeddable Prompt Composer: A step toward platform capability

For the first release of the Composer, we partnered with the Workbench team. Their application is designed to boost end-user productivity by enabling anyone to quickly use LLMs to analyze and draw insights from data. Their goal was to let users define custom prompts inline and on the fly, while also giving them the ability to reuse existing prompt templates. Embedding Prompt Composer into Workbench became a critical proof point that prompt authoring could scale beyond a single product and serve as a platform-wide capability.

The Embeddable Prompt Composer is going live in October 2025.

While product teams partnered with cross-functional stakeholders to address inline prompt authoring needs within their applications, UX worked closely with design counterparts from those applications to ensure seamless experiences and define how the embeddable composer would function for them.

Users want the ability to save effective prompts, along with their model configurations, directly from Model Builder, or to move seamlessly into Prompt Builder for further refinement.

Embedding the Prompt Composer in Interaction Explorer would allow users to write inline instructions and instantly preview responses as they create custom tags to classify moments in agent conversations.

Lessons learned

Lead with a Point of View: Entering discussions with a perspective helps orient the conversation and accelerate alignment.

Think Like a System, Not a Feature: Building a platform capability requires systems thinking. It’s not just about what serves a single team or user, but what scales across the ecosystem.

Seek Clarity Relentlessly: If something isn’t clear, ask in multiple ways. Chances are, others share the same confusion, and surfacing it early creates shared understanding.

The people behind the platform

Scaling Prompt Builder into a platform capability was not only about components and architecture; it was also about people. The journey was fueled by cross-functional collaboration, champions who believed in the vision, and teams who celebrated every milestone along the way. 🙏